Unveiling the Truth: Is OpenAI Sora Hiding Secrets?

In the rapidly evolving landscape of artificial intelligence, OpenAI has again stirred the pot with the launch of Sora, its text-to-video AI tool. While the internet buzzes with excitement over this new offering, a closer look reveals complexities and unanswered questions that go beyond the surface-level hype. What has been conspicuously absent from the promotional content? This blog post aims to unravel the layers surrounding Sora, covering not just its capabilities but also the concerns that have emerged since its debut.

From compliance challenges and regional limitations to artistic backlash and the quirks of generated content, Sora seems to carry the weight of both promise and contention. As early reviewers like Marques Brownlee have shown, the tool’s outputs evoke a spectrum of reactions, from the breathtaking to the unsettling. Join us as we dig into the hidden narratives behind Sora’s rollout: the potential implications for content creation, the ethical considerations surrounding AI-generated material, and the broader discourse shaping the future of artificial intelligence. Let’s venture beyond the headlines and uncover what OpenAI might not be saying about Sora.

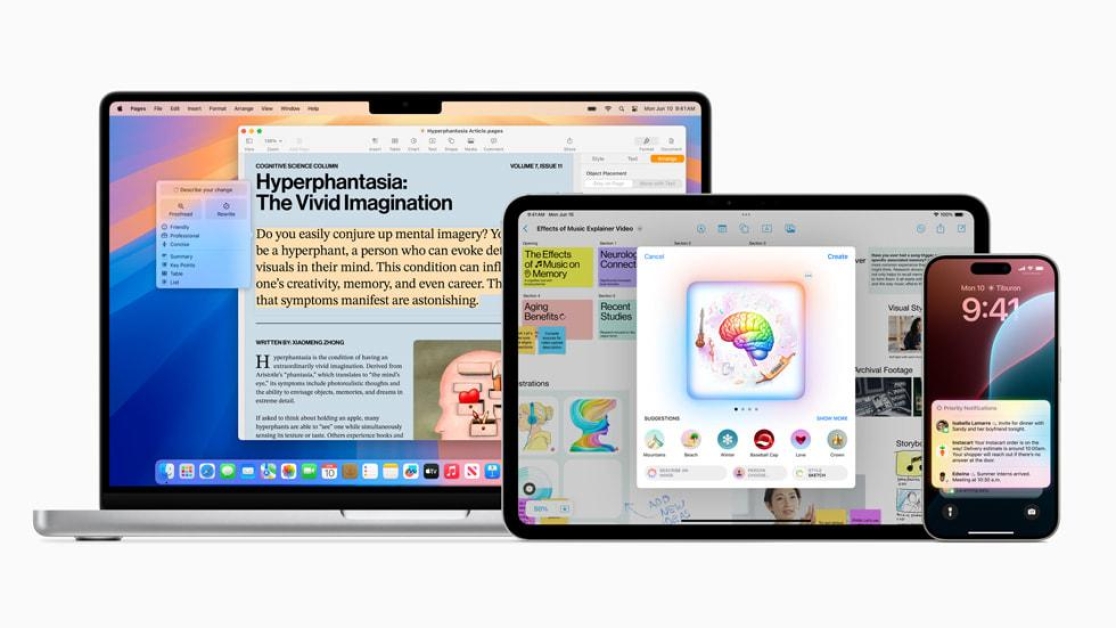

Exploring the Intricacies of OpenAI Sora’s Launch and Accessibility

OpenAI’s Sora has entered the scene with a buzz, but its launch raises more questions than answers. Users in the UK, Switzerland, and the European Economic Area currently find themselves excluded from this text-to-video tool. This isn’t a mere oversight; reports suggest that OpenAI is grappling with complex compliance challenges related to stringent data protection laws and the Digital Services Act in Europe. As the digital landscape grows more intricate, ensuring that AI technologies meet regulatory standards is a hefty undertaking. OpenAI’s internal compliance teams are reportedly scrambling to navigate this legal minefield, a reminder that deploying innovative technologies frequently goes hand in hand with ethical considerations and legal hurdles.

Moreover, the skepticism surrounding Sora has sparked considerable debate. Prominent reviewers have provided mixed feedback, describing the outputs as both “horrifying and inspiring.” While the tool can generate stunning cinematic landscapes and artistic effects, criticisms have emerged regarding its potential for deepfakes and misinformation. OpenAI has attempted to mitigate these risks by introducing visible watermarks and C2PA metadata to mark AI-generated videos. However, some critics claim these measures might not go far enough, suggesting that the technical safeguards can easily be bypassed as more users engage with the platform. The tension between innovation and responsible AI use intrigues technologists and regulators alike, serving as a pointed reminder that with great power comes great responsibility.

Delving into Regulatory Challenges and Compliance Quagmires

As OpenAI launches Sora, a wave of excitement and curiosity sweeps through the digital landscape. However, beneath the glossy surface of promotional materials lie significant regulatory hurdles and compliance challenges that could impede its global rollout. Among these challenges, data protection laws stand out prominently, notably in regions like Europe where strict regulations govern digital services. OpenAI’s internal compliance teams are reportedly engaged in a complex struggle to ensure that Sora adheres to local legal standards, navigating a minefield of ethical questions surrounding the potential misuse of AI technologies, including the creation of deepfakes and disinformation. These compliance issues complicate Sora’s availability, leaving users in specific regions, including the UK and Switzerland, unable to access the tool while OpenAI works to address these concerns.

Moreover, these regulatory constraints amplify the scrutiny surrounding Sora’s functionality. Despite the capabilities that allow users to create visually stunning content, critics are raising alarms about the effectiveness of the implemented safeguards. While OpenAI has promised to block particularly harmful outputs, including sexual deepfakes, the reality is more complex. Digital forensics experts warn that once these protections are in place, clever individuals may soon discover ways to circumvent them. The challenge for OpenAI is not merely about introducing new tools, but about ensuring they operate within a framework that prioritizes responsible usage. In this evolving landscape, the balance between innovation and compliance becomes ever more critical, as both users and regulators question the ethical ramifications of powerful AI tools.

Unpacking Quality Concerns and Artistic Limitations in AI-generated Content

There is a stark divergence between the impressive aesthetics and the practical limitations of content generated by tools like Sora. While reviewers have acknowledged the tool’s ability to produce stunning visuals that evoke a sense of wonder, such as lush cinematic landscapes and intricate stylized scenes, there remain significant concerns regarding realism. Critics point out that the physics and coherence within generated videos can be strikingly off-kilter. For instance, gravity may not behave as expected, leading to outputs that feel jarring or unrealistic. This raises the question of whether Sora can truly bridge the gap between artistic inspiration and practical applicability, particularly in serious contexts where visual fidelity is paramount.

Moreover, Sora’s regional availability and compliance challenges have sparked debates about accessibility and ethical responsibility. OpenAI’s decision to restrict the tool’s rollout in certain regions, including the UK and Switzerland, highlights the complexities involved with data protection laws and the potential for misuse, especially concerning deepfakes and disinformation. While measures like visible watermarks aim to maintain transparency, critics fear that these safeguards may prove inadequate in preventing exploitation. The overall sentiment among observers indicates an underlying tension between innovation and oversight, and raises the question of whether the ethical frameworks that govern AI can keep pace with the technology’s rapid advancement.

Addressing Ethical Implications and User Safety Measures in Video AI Technology

The rapid evolution of video AI technology brings with it a complex tapestry of ethical implications that require careful consideration. Users are often unaware of the potential risks associated with such tools, especially when it comes to deepfakes and disinformation. OpenAI’s Sora, while promising revolutionary advancements in AI-generated video, has not fully addressed how it plans to mitigate these risks effectively. Concerns have been raised about the access and use of sensitive content generated by AI, particularly in regions with strict data protection laws. The ongoing challenges of complying with legislation, especially in Europe, highlight the delicate balance that technologists must strike between innovation and regulation. The implications of these technologies extend beyond mere functionality; they touch on fundamental social issues such as privacy, consent, and potential misuse. Ensuring user safety should be a priority, so that adverse outcomes arising from the tool’s use can be prevented.

That said, OpenAI has implemented several user safety measures to combat malicious use of Sora. These include blocking particularly damaging forms of content, such as sexual deepfakes, and incorporating metadata and visible watermarks in AI-generated videos. While these measures are a step in the right direction, critics argue that they may fall short in practice. The adaptive nature of digital manipulation poses an ongoing challenge; as technology improves, so too do the methods of those intent on circumventing safeguards. Therefore, it is crucial that stakeholders not only implement but also continually update and refine safety protocols in response to emerging threats. A proactive approach is essential for fostering a trustworthy environment where AI can be harnessed for creativity without compromising ethical standards and user safety.

Wrapping Up

As we draw the curtain on our exploration of OpenAI’s latest innovation, Sora, it’s clear that while the excitement surrounding this text-to-video tool is palpable, deeper questions linger just beneath the surface. We’ve looked at the capabilities that make Sora a potential game-changer, examined the barriers to access that leave many prospective users out in the cold, and confronted the ethical dilemmas that arise in an age of AI-generated content. The contrasting sentiments voiced by early reviewers, like Marques Brownlee, remind us that progress isn’t always a straightforward path; it can be both awe-inspiring and cause for concern.

Ultimately, the thrill of innovation brings with it an obligation for transparency and a commitment to responsible use. As we navigate the ever-evolving landscape of artificial intelligence, it’s crucial to stay informed about the potential implications of tools like Sora, not just for creators, but for society at large. Will OpenAI address the regulatory challenges head-on and improve upon its initial offering to meet the diverse needs of users globally? Only time will tell.

Thank you for joining us on this journey. Stay curious, stay informed, and let’s continue to ask the tough questions together. Until next time, keep seeking the truth behind the buzz!